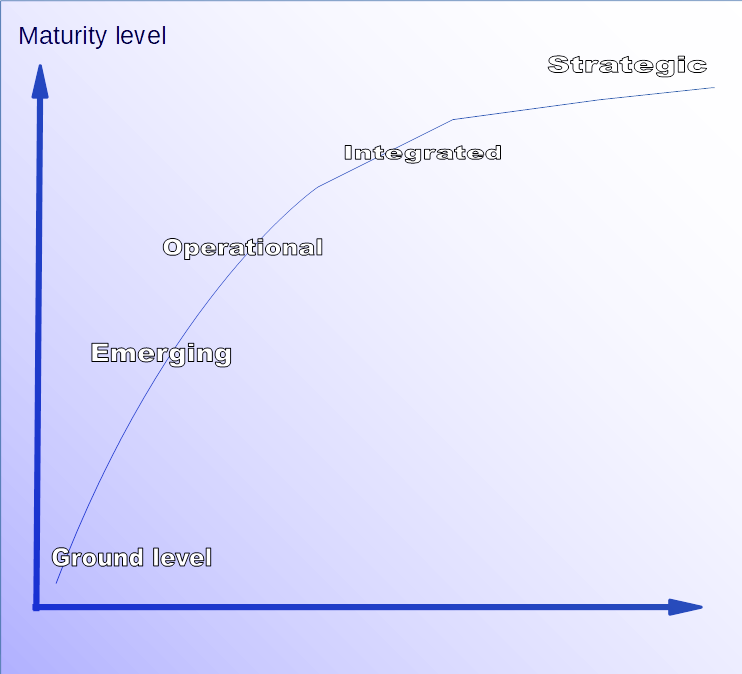

AI Maturity

Artificial intelligence and machine learning are here to stay. The opportunities to create digital experiences that closely resemble human capabilities are endless: automated decision-making, vision, speech recognition and natural language processing. AI systems are primarily data-driven; they classify, recommend, predict, group or segment based on advanced algorithms or models.

This means that traditional decision-making is moving away from “instinct” and deterministic “business rules” into the realm of probabilities: instead of a fixed yes or no, an algorithm provides you with a probability. For example, a vision model may give you the probability that the picture you are looking at shows a cat (or whatever type of object you are interested in), and a credit model may give you the probability that a loan application will become a profitable asset for a bank.
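To make the shift from rules to probabilities concrete, the minimal Python sketch below contrasts a deterministic business rule with a decision based on a model's probability. Everything in it is hypothetical: the `LoanApplication` fields, the cut-offs, and the gain and loss figures are illustrative assumptions, and approving when the expected value is positive is just one simple way to turn a probability into a decision.

```python
from dataclasses import dataclass


@dataclass
class LoanApplication:
    # Hypothetical features, for illustration only.
    income: float
    debt_ratio: float


def rule_based_decision(app: LoanApplication) -> bool:
    """Deterministic business rule: fixed cut-offs give a plain yes/no."""
    return app.income >= 30_000 and app.debt_ratio <= 0.4


def break_even_probability(gain: float, loss: float) -> float:
    """Probability of a profitable loan at which the expected value is zero."""
    return loss / (gain + loss)


def probabilistic_decision(p_profitable: float, gain: float, loss: float) -> bool:
    """Approve only if the expected value p * gain - (1 - p) * loss is positive."""
    expected_value = p_profitable * gain - (1 - p_profitable) * loss
    return expected_value > 0


if __name__ == "__main__":
    app = LoanApplication(income=35_000, debt_ratio=0.3)
    print("rule-based:", rule_based_decision(app))
    # In practice p_profitable would come from a model; these values are made up.
    for p in (0.80, 0.90):
        print(p, probabilistic_decision(p, gain=1_000, loss=5_000))
    print("break-even:", round(break_even_probability(1_000, 5_000), 3))
```

With an assumed gain of 1,000 on a good loan and a loss of 5,000 on a bad one, the break-even probability is 5,000 / 6,000 ≈ 0.83, so even an 80% chance of a profitable loan is not enough to approve it. Being "approximately right" here means choosing the threshold from the business trade-off rather than treating the model's output as a certainty.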

Thinking in terms of probabilities has huge implications for organisations. What would you prefer in your decision-making: would you rather be approximately right, or precisely wrong? The former may not be optimal, but the latter could very confidently lead you to financial losses or reputational damage. Even more importantly, do you know where your AI systems stand? From our experience in independently validating predictive models, we recognise five levels of AI maturity.

Ground level

Organisations at the “Ground level” are generally not familiar with AI systems and their potential benefits, or may even be unaware of them.

Emerging

Organisations that have taken the first steps to develop and deploy AI systems. The typical approach is ad hoc or experimental. AI systems are often deployed in parallel to existing business processes to evaluate what this alternative approach may bring to the organisation. Developers usually have a lot of freedom to choose models and tools, without clear governance or formal controls in place. Independent validation of AI systems is still rare. Organisations may overreact when a model does not perform as expected, i.e. switch back to traditional, non-probabilistic decision-making.

Operational

Organisations that have seen the benefits of AI in doing business, and have very likely experienced the possible downsides of using AI first-hand. They have identified the need to independently challenge model development choices and to monitor the performance of AI systems. They are starting to adopt the concept of a model life cycle, which includes a periodic review of model performance, of model use and of the need to adjust their AI/machine learning models, and are starting to come to grips with the concept of formal, independent model validation.

Integrated

Organisations at this maturity level understand the concept of a model life cycle. Most have clearly defined purposes for their models, but there is room for improvement in legacy models built at the “Emerging” or “Operational” level. They know that a model in production is never finished, and recognise the need to periodically and independently review and challenge AI data, implicit assumptions and possible translation errors between development prototypes and production-ready solutions. They are aware of data bias and data creepage (gradual drift in the data): new business generated by the AI system is likely to alter the data on which the original data-driven solution was built, so realignment with the business and recalibration are a must. Organisations at this maturity level start to balance the accuracy and robustness of their AI systems.
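One common way to make data creepage measurable is the Population Stability Index (PSI), which compares the distribution of a model input or score at development time with the distribution seen in production. The sketch below is illustrative and not a method prescribed in this article; the function names, the ten quantile bins and the 0.10/0.25 thresholds are assumptions (the thresholds are a widely cited rule of thumb in credit scoring, not a standard), and the sample data is made up.

```python
import math
from bisect import bisect_right


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are
    the shares of the reference and new sample that fall into bin i.
    """
    # Bin edges are the quantiles of the reference (development) sample.
    ordered = sorted(expected)
    edges = [ordered[len(ordered) * k // bins] for k in range(1, bins)]

    def shares(values):
        counts = [0] * bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(value):
    """Map a PSI value to an action using assumed rule-of-thumb thresholds."""
    if value < 0.10:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate / recalibrate"


if __name__ == "__main__":
    # Hypothetical model scores: at development time vs. after the business mix shifted.
    development = [i / 1000 for i in range(1000)]
    production = [0.5 + i / 2000 for i in range(1000)]
    value = psi(development, production)
    print(round(value, 2), drift_status(value))
```

Running such a check on a schedule, per input and on the model score, is one way to turn "recalibration is a must" into a concrete trigger; the thresholds themselves should follow the organisation's own risk appetite.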

Strategic

An organisation at this maturity level understands the need to design a model landscape that ensures its business strategy is executed with AI. It recognises model risk as an integral part of doing business, and has defined, and reports on, its risk appetite for model risk in its AI systems. For each model proposal it examines the business case, with clear P&L objectives, and its alignment with the overall model architecture. Periodic review and independent validation are formalised in corporate governance and policies. The organisation takes a very proactive approach to AI solutions: clear AI standards are in place, and working procedures for AI, including logging human overrides for future improvement, are no mystery. It anticipates business and regulatory challenges, and its AI solutions are driven more by design than by evolution.
